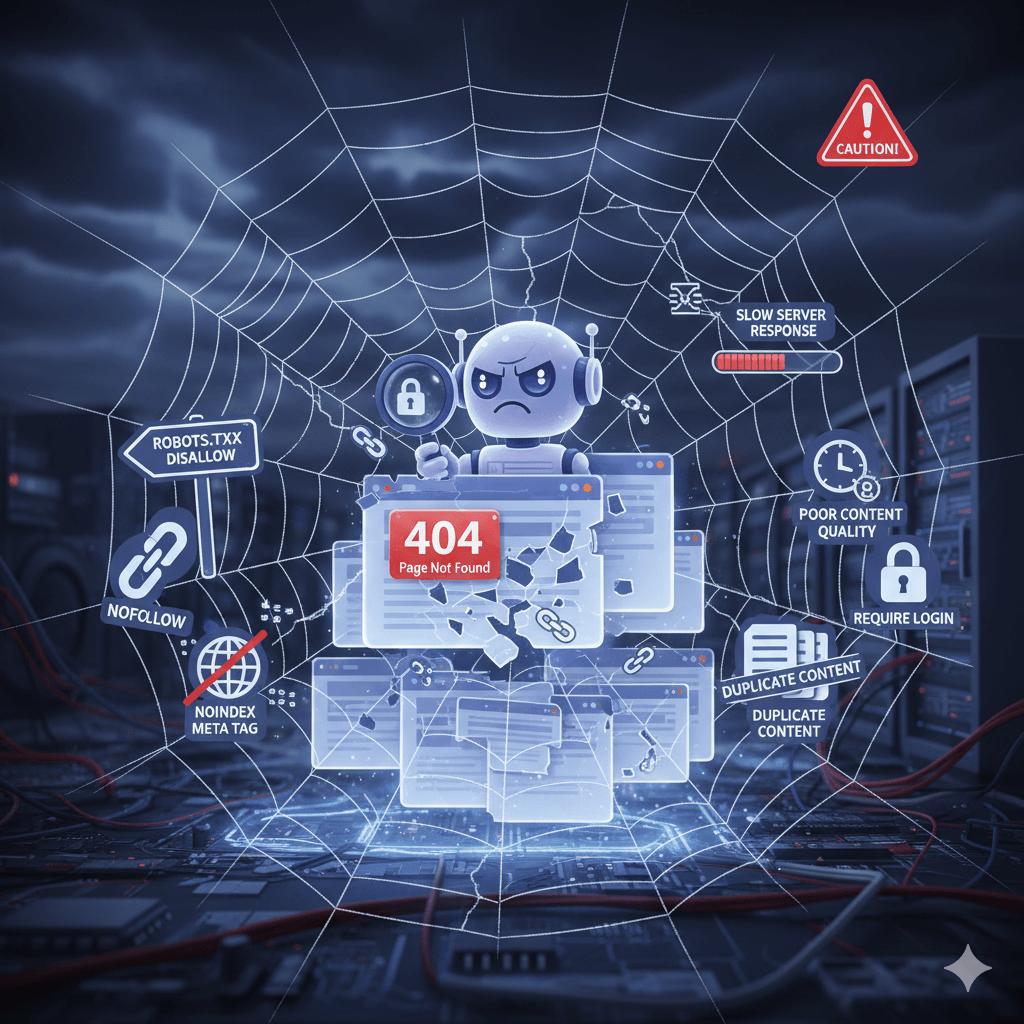

When you publish a new website or page, you normally expect to see it live on Google within a day or two. You published your page, submitted it for indexing in Google Search Console, and waited a few days, but still nothing happens. Your page is nowhere to be seen on Google. Frustrated, you go back to Search Console and check the Pages report, and there it is: your page is crawled but not indexed. Have you ever run into a similar issue? If so, you will find this post useful. Here we discuss common issues that prevent a page from getting indexed, along with possible fixes.

Newer Page

Newer pages and websites often take longer than you expect to get indexed. It can take anywhere from 2 days to, in some cases, a whole week. So wait it out a bit before you start troubleshooting.

Blocked by robots.txt

The robots.txt file of your site tells Google's crawlers which pages they may crawl and which ones they must stay out of. Developers often block brand-new websites and newly added pages in robots.txt during the testing phase to keep them out of search results, and the rule can easily be left in place after launch. Make sure the page is not blocked by robots.txt before submitting it for indexing.
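As a quick way to check this, here is a minimal sketch that uses Python's standard `urllib.robotparser` module to test whether a URL is disallowed for Googlebot. The helper name `is_blocked`, the sample rules, and the `example.com` URLs are illustrative assumptions, not taken from any real site.

```python
from urllib.robotparser import RobotFileParser


def is_blocked(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt text disallows `url` for `user_agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


# Hypothetical robots.txt left over from a testing phase.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /drafts/
"""

print(is_blocked(SAMPLE_ROBOTS, "https://example.com/drafts/new-page"))  # True
print(is_blocked(SAMPLE_ROBOTS, "https://example.com/blog/new-page"))    # False
```

The sketch works on robots.txt text you paste in, so it does not need network access. The standard-library parser uses simple prefix matching, so treat its answer as a first check rather than a guarantee of how Googlebot interprets more complex rules.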

Thin / Duplicate Content

If your page has thin content with very little text, that can prevent it from being indexed. Make sure the page carries substantially more text content than code. Use original content: Google does not value duplicate or plagiarized content and may decline to index it. As a rule of thumb, try to keep every page at least 600 words long so that it does not fall into the thin-content category.
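To get a rough feel for how much visible text a page has, the following sketch counts words while skipping `<script>` and `<style>` content. It relies only on the standard `html.parser` module. The names `VisibleTextParser`, `count_visible_words`, and `is_thin` are hypothetical, and the 600-word default simply mirrors the rule of thumb above.

```python
from html.parser import HTMLParser


class VisibleTextParser(HTMLParser):
    """Collect words that are not inside <script> or <style> elements."""

    SKIPPED_TAGS = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.words = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0:
            self.words.extend(data.split())


def count_visible_words(html: str) -> int:
    parser = VisibleTextParser()
    parser.feed(html)
    parser.close()
    return len(parser.words)


def is_thin(html: str, min_words: int = 600) -> bool:
    """Flag pages below the word-count rule of thumb (an assumption, not a hard limit)."""
    return count_visible_words(html) < min_words


SAMPLE_HTML = (
    "<html><head><style>p { color: red; }</style></head>"
    "<body><p>Hello indexing world</p><script>var x = 1;</script></body></html>"
)
print(count_visible_words(SAMPLE_HTML))  # 3
print(is_thin(SAMPLE_HTML))              # True
```

Word count is only a proxy. A short page that fully answers a question can still be useful, so use the number as a prompt to review the page, not as a verdict.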

Lack of Internal Links

A website can also fail to get indexed due to poor internal linking. Think of your website as a map: search engines navigate it by following links from page to page and from site to site. If new pages are not linked from the homepage or the main sections of the website, Google may not find them. Adding appropriate internal links to your new page helps both users and crawlers understand how it fits into the site as a whole. Linking to your newer page from already existing pages solves this issue.
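One way to spot pages that crawlers cannot reach is to walk your internal links starting from the homepage. The sketch below assumes you already have a link map (page to list of linked pages), for example from a site crawl export. The function name and the sample URLs are made up for illustration.

```python
from collections import deque


def find_unreachable_pages(links: dict, start: str) -> set:
    """Return pages that cannot be reached from `start` by following links."""
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    # Every page that appears as a source or as a link target.
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    return all_pages - seen


# Hypothetical site: the new post links out, but nothing links to it.
SAMPLE_LINKS = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/"],
    "/blog/new-post": ["/"],
}
print(find_unreachable_pages(SAMPLE_LINKS, "/"))  # {'/blog/new-post'}
```

Any page in the result has no path of internal links leading to it from the homepage, so it is a good candidate for a link from an existing page.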

Redirection Errors

A significant cause of indexing issues is redirection errors. Long chains of redirects from one URL to another, or redirect loops (e.g., Page A redirects to Page B, then Page B redirects back to Page A), can cause Google’s crawler to stop following the URL altogether. Too many redirects, or redirects pointing to broken pages (404), can also result in the URL being dropped from Google’s index. Therefore, be sure to routinely review your redirects and ensure they take users and bots to the correct locations.
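The loop and chain problems described above can be checked offline if you have your redirect rules as a simple source-to-target map. This is a minimal sketch under that assumption. The function name `trace_redirects` and the `max_hops` default of 5 are arbitrary choices for illustration, not a limit taken from Google.

```python
def trace_redirects(redirects: dict, start: str, max_hops: int = 5):
    """Follow `start` through a {source: target} redirect map.

    Returns (chain, status), where status is "ok", "loop", or "too_many".
    """
    chain = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        chain.append(current)
        if current in seen:
            return chain, "loop"
        seen.add(current)
        if len(chain) - 1 > max_hops:
            return chain, "too_many"
    return chain, "ok"


# Hypothetical rules: /a and /b point at each other, /old is a clean redirect.
SAMPLE_REDIRECTS = {"/a": "/b", "/b": "/a", "/old": "/new"}

print(trace_redirects(SAMPLE_REDIRECTS, "/a"))    # (['/a', '/b', '/a'], 'loop')
print(trace_redirects(SAMPLE_REDIRECTS, "/old"))  # (['/old', '/new'], 'ok')
```

The sketch only inspects the rules you give it. It does not confirm that the final URL in an "ok" chain actually returns a working page, so that still needs a separate check.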

Poor Server Responses

When search engines like Google attempt to access your site, the first place they connect to is the server — the computer hosting your site. If the server doesn’t respond appropriately or in a timely manner, Google’s crawlers can’t read your pages, which can cause issues with indexing or rankings.

Poor server responses usually appear in Google Search Console as 5xx errors (like 500, 502, 503, or 504).

Noindex Tag

A noindex tag instructs search engines at the page level:

“This page is accessible, but do not display it in search results.”

When it is inserted into a page’s HTML code, Google can crawl the page and read the content, but it won’t index it or show it in search results.

Removing the noindex tag is pretty straightforward in CMS platforms like WordPress, Wix, and Shopify. For custom-coded sites, you have to edit the HTML in the head section and delete the noindex directive or the entire tag, then save and re-upload the file.
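If you are not sure whether a page still carries the tag, a small script can look for it in the HTML. The sketch below uses the standard `html.parser` module and checks `<meta name="robots">` and `<meta name="googlebot">` tags. The class and function names are hypothetical.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() in {"robots", "googlebot"}:
            for directive in attr_map.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the page's robots meta tags include a noindex directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


print(has_noindex('<head><meta name="robots" content="noindex, follow"></head>'))  # True
print(has_noindex('<head><meta name="robots" content="index, follow"></head>'))    # False
```

This only inspects the HTML. A noindex directive can also be sent in the `X-Robots-Tag` HTTP response header, which this sketch does not check.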

Sitemap Accuracy and Crawl Budget

Your XML sitemap serves as a guide for search engines. It contains the list of all URLs on the website. It indicates to Google which pages on your website are important and should be crawled and indexed.

When your sitemap still contains deleted, broken, or redirected URLs, Googlebot requests them and runs into 404 errors or redirects instead of live pages.

Here’s why that is an issue:

- It Wastes Crawl Budget

  If your sitemap is still telling Googlebot to visit deleted pages, the bot will waste time requesting URLs that return 404 (Not Found) or 410 (Gone). This means that less time is spent crawling your live, useful pages, and the discovery of new or updated content is slower.

- It Provides Confusing Signals to Google

  When Google discovers broken or deleted URLs within your sitemap, it receives confusing signals regarding the trustworthiness and organization of your site. A sitemap is designed to inform the search engine of the most current version of your site. When it contains obsolete links, Google may perceive the site as poorly maintained and technically untrustworthy, so a small amount of SEO credibility can be lost.

- It Messes Up the Search Console Reports

  If you have deleted pages that are not removed from your sitemap, Google Search Console will continue to show indexing errors like:

  - “Submitted URL not found (404)”

  - “Submitted URL marked ‘noindex’”

  This will clutter your reports, making it challenging to find real issues to address. Cleaning up old URLs therefore gives you an accurate, clean indexing report.
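A sitemap cleanup like the one described above can be partly automated. The sketch below parses a sitemap with the standard `xml.etree.ElementTree` module and lists every URL whose recorded HTTP status is not 200. It assumes you have already collected the status codes, for example from a crawl export. The function names and the sample data are invented for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace used by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str) -> list:
    """Return the <loc> values of all <url> entries in a sitemap."""
    root = ET.fromstring(sitemap_xml)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text]


def stale_urls(sitemap_xml: str, status_by_url: dict) -> list:
    """Return (url, status) pairs for sitemap URLs whose recorded status is not 200.

    URLs without a recorded status are skipped rather than guessed at.
    """
    stale = []
    for url in sitemap_urls(sitemap_xml):
        status = status_by_url.get(url)
        if status is not None and status != 200:
            stale.append((url, status))
    return stale


# Hypothetical sitemap and crawl results.
SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/old-page</loc></url>
  <url><loc>https://example.com/moved</loc></url>
</urlset>"""
SAMPLE_STATUSES = {
    "https://example.com/": 200,
    "https://example.com/old-page": 404,
    "https://example.com/moved": 301,
}

for url, status in stale_urls(SAMPLE_SITEMAP, SAMPLE_STATUSES):
    print(status, url)
```

Every URL this prints is a candidate for removal from the sitemap, or for replacement with the final destination URL in the case of a redirect.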

Confusion of Redirections

When you delete a page, you sometimes redirect its URL to an entirely new page (typically with a 301 redirect). If you keep the old URL in your sitemap after placing the redirect, Google may try to process both the old and new versions, creating a duplicate or canonical issue. Cleaning out your sitemap lets Google focus only on the correct, active URLs.

Canonical Tag Errors

Canonical tag mistakes can confuse Google. A canonical tag signals to search engines which version of a page is considered “the one.” This is most relevant when there are duplicates or other very similar pages.

If you mistakenly point the canonical tag to the wrong page — or if every page has the same canonical URL — Google might skip indexing some pages altogether.
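To catch both mistakes, you can extract the canonical URL from each page and compare it with the page's own URL. The following sketch does that with the standard `html.parser` module. It assumes you have the HTML of each page keyed by its URL. The class name, function name, and sample URLs are hypothetical.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Capture the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attr_map = {name: (value or "") for name, value in attrs}
        if "canonical" in attr_map.get("rel", "").lower().split():
            self.canonical = attr_map.get("href") or None


def canonical_mismatches(pages: dict) -> dict:
    """Map each page URL to its canonical URL when the two differ."""
    mismatches = {}
    for url, html in pages.items():
        parser = CanonicalParser()
        parser.feed(html)
        # Ignore a trailing slash so /page and /page/ are treated as the same URL.
        if parser.canonical and parser.canonical.rstrip("/") != url.rstrip("/"):
            mismatches[url] = parser.canonical
    return mismatches


# Hypothetical pages: both declare /a as canonical.
SAMPLE_PAGES = {
    "https://example.com/a": '<head><link rel="canonical" href="https://example.com/a"></head>',
    "https://example.com/b": '<head><link rel="canonical" href="https://example.com/a"></head>',
}
print(canonical_mismatches(SAMPLE_PAGES))
# {'https://example.com/b': 'https://example.com/a'}
```

A mismatch is not automatically an error, since pointing a duplicate at its main version is exactly what the tag is for. The output is a list to review: if many unrelated pages share one canonical URL, that is likely the mistake described above.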

Final Thoughts

Most indexing issues can be spotted by examining the reports in Google Search Console. Some of these errors are time-consuming and need more attention to fix, while statuses like “Discovered – currently not indexed” or “Crawled – currently not indexed” often resolve on their own or after a quick URL resubmission in Google Search Console.

Keep an eye on technical issues, and ensure your site is lightweight, mobile-friendly, and fast. Make sure your content is original and of high quality. Combined, these steps help your pages get indexed faster.